Since OpenAI released their ChatGPT (gpt-3.5-turbo) and Whisper APIs a few days ago, there’s been a whole bunch of voice > Whisper > ChatGPT > TTS demos, so I had to do one too.

To make this one slightly different, it uses wake word detection and voice activity detection (VAD) from Picovoice, so it’s kinda like an Alexa... ish?
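Cobra reports a voice probability for each audio frame, so "stop when the user stops speaking" boils down to a small end-of-speech rule. Here's a rough sketch of that rule, not the project's actual code. It assumes 16 kHz audio in 512-sample frames (check `cobra.sample_rate` and `cobra.frame_length` in a real app). The 0.7 speech threshold, the 1 second of trailing silence, and the name `frames_to_record` are all my own hypothetical choices. Only the 6-second cap comes from the flow described in this post.

```python
from typing import Iterable

# Assumed audio format: check cobra.sample_rate and cobra.frame_length in the real app.
SAMPLE_RATE = 16000
FRAME_LENGTH = 512
# Hypothetical tuning values, not taken from the original project.
VOICE_THRESHOLD = 0.7   # probability at or above this counts as speech
SILENCE_SECONDS = 1.0   # trailing silence that ends the utterance
# The hard cap on recording length.
MAX_SECONDS = 6.0


def frames_to_record(voice_probs: Iterable[float],
                     sample_rate: int = SAMPLE_RATE,
                     frame_length: int = FRAME_LENGTH,
                     threshold: float = VOICE_THRESHOLD,
                     silence_seconds: float = SILENCE_SECONDS,
                     max_seconds: float = MAX_SECONDS) -> int:
    """Consume one VAD probability per frame; return how many frames to keep."""
    max_frames = int(max_seconds * sample_rate) // frame_length
    silence_frames = int(silence_seconds * sample_rate) // frame_length
    heard_speech = False  # don't stop on silence before the user has said anything
    silent_run = 0
    count = 0
    for prob in voice_probs:
        count += 1
        if prob >= threshold:
            heard_speech = True
            silent_run = 0
        else:
            silent_run += 1
        if heard_speech and silent_run >= silence_frames:
            break  # user has stopped speaking
        if count >= max_frames:
            break  # 6-second cap reached
    return count
```

Because it pulls probabilities lazily, you could feed it a generator that reads a mic frame and runs Cobra on it. Recording then stops as soon as either condition fires. Requiring some speech before silence can end the recording means a slow start doesn't cut the user off.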

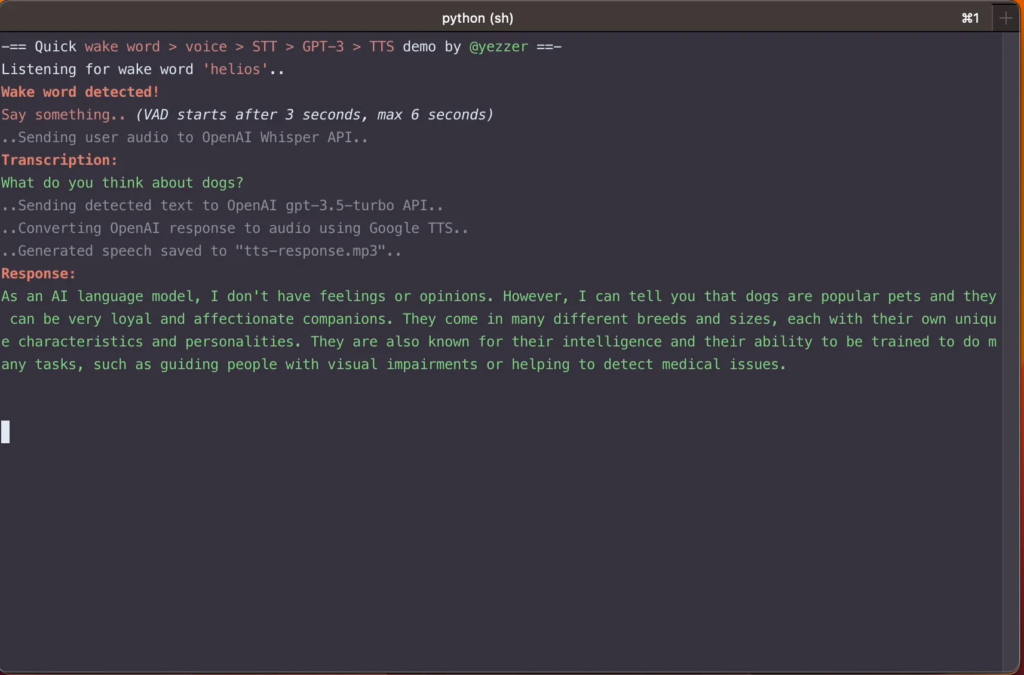

The flow is:

1. The app starts and waits for the wake word (Helios, but you can train your own wake words).
2. It starts recording from the mic, using Cobra VAD to detect when the user stops speaking, up to a maximum of 6 seconds.
3. The audio is saved to a file and sent to the Whisper API, which returns the text.
4. The text is sent to the ChatGPT API, which returns a response.
5. The response is sent to the Google Cloud Text-to-Speech API (voice en-GB-Wavenet-B), which returns an MP3.
6. The MP3 is played via mpg321.
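Most of the glue between those steps is JSON over HTTPS. Here's a stdlib-only sketch of the fiddly parts:

* wrapping the recorded 16-bit PCM in a WAV file
* building the chat-completions and Google TTS request bodies
* unpacking the responses

All function names are hypothetical. Keeping a running `history` of earlier turns is an assumption, since nothing here says whether earlier turns are sent. The Whisper upload itself is a multipart form (`file` plus `model="whisper-1"`), which I've left out to keep this short.

```python
import base64
import io
import json
import struct
import subprocess
import urllib.request
import wave

# Endpoints; whisper-1 / gpt-3.5-turbo are the model names these APIs launched with.
WHISPER_URL = "https://api.openai.com/v1/audio/transcriptions"  # multipart: file + model="whisper-1"
CHAT_URL = "https://api.openai.com/v1/chat/completions"
TTS_URL = "https://texttospeech.googleapis.com/v1/text:synthesize"


def pcm_to_wav_bytes(samples, sample_rate=16000):
    """Wrap recorded 16-bit mono PCM samples in a WAV container for Whisper."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))  # WAV is little-endian
    return buf.getvalue()


def transcript_text(whisper_response):
    """Whisper's default JSON response carries the transcription in "text"."""
    return whisper_response["text"]


def chat_request_body(history, user_text, model="gpt-3.5-turbo"):
    """Build a chat-completions request; passing earlier turns in `history` is an assumption."""
    messages = history + [{"role": "user", "content": user_text}]  # new list, history untouched
    return {"model": model, "messages": messages}


def reply_text(chat_response):
    """Pull the assistant's reply out of a chat-completions response."""
    return chat_response["choices"][0]["message"]["content"]


def tts_request_body(text, voice="en-GB-Wavenet-B", language_code="en-GB"):
    """Google Cloud TTS request for MP3 output in the en-GB-Wavenet-B voice."""
    return {
        "input": {"text": text},
        "voice": {"languageCode": language_code, "name": voice},
        "audioConfig": {"audioEncoding": "MP3"},
    }


def mp3_from_tts_response(tts_response):
    """Google returns the audio base64-encoded in "audioContent"."""
    return base64.b64decode(tts_response["audioContent"])


def post_json(url, body, headers):
    """POST a JSON body and parse the JSON reply; credentials go in `headers`."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", **headers},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


def play_mp3(path):
    """Hand the saved MP3 to mpg321."""
    subprocess.run(["mpg321", path], check=True)
```

For the ChatGPT step you'd call `post_json(CHAT_URL, chat_request_body(history, text), {"Authorization": "Bearer " + api_key})` and pass the result to `reply_text`. The TTS call works the same way with whatever credentials your Google Cloud project uses. Write the decoded MP3 to disk and hand it to `play_mp3`.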

It only took a few hours of playing about with the APIs, and it was really fun!